How to convert natural language text to SQL using LangChain

In this post, we're going to look at how you can use LangChain and OpenAI's GPT model to convert natural language queries to SQL, execute them, and get an answer from your database in plain English.

Introduction

LangChain is a powerful framework for building LLM powered applications. In this post, we're going to go over and cover:

- The Basics of LangChain

- Installing LangChain using Python

- Creating Chains using SQL data

- Prompting Large Language Models (GPT-3.5)

- And more!

Use cases

In my opinion the most relevant use cases for generating and executing SQL queries using Natural Language with LangChain are:

- Creating chatbots that can answer questions based on database data

- Generating complex queries based on natural language

- Expanding database access to non-technical people and stakeholders

SQL Query building

SQL query building simply refers to the process of generating a SQL query from a question or an input. It involves writing the necessary statements that specify the desired data, conditions, and operations to be performed on the database. It starts with a prompt, then that prompt is converted into a SQL statement. This makes it really easy to generate queries based on questions or requirements instead of manually writing complex INNER and OUTER joins.

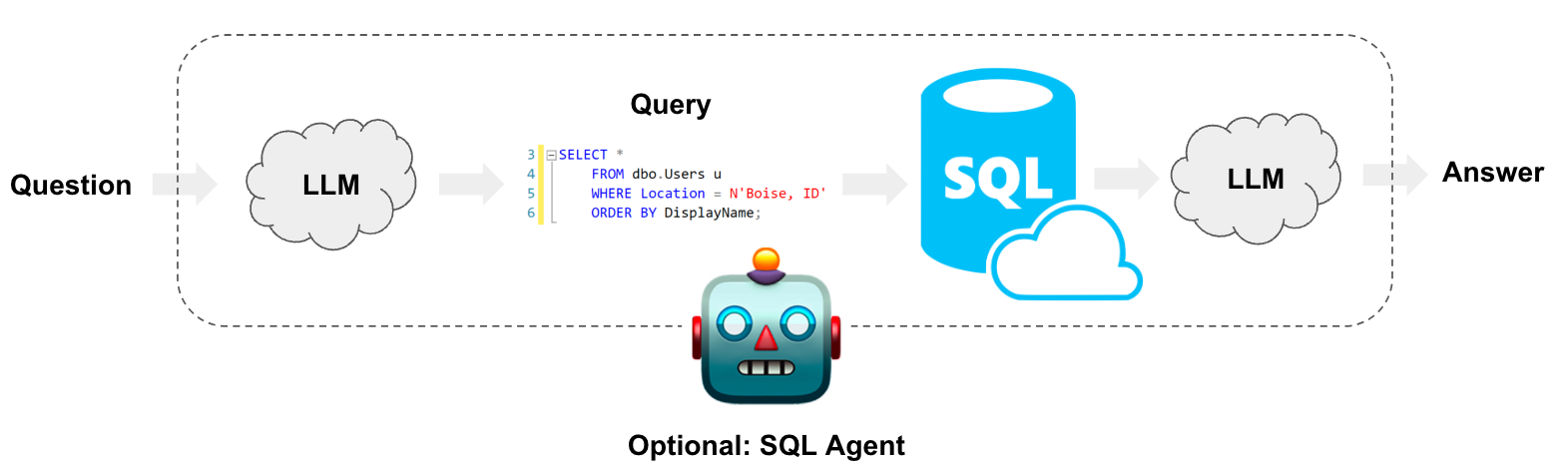

As you can see from the diagram above, it starts with a natural language prompt (common English) which could be a question, such as: How many users with a Gmail email address? which is then converted to a SQL statement. This gets executed by the SQL Engine, the response is then sent back to the LLM and a natural language answer is generated.

Awesome, non-technical stakeholders asking for specific metrics can directly ask the database as they don't need to have a deep understanding of SQL. More importantly, using natural language will improve efficiency due to the speed at which questions can be turned into answers vs. having someone on the dev team write the query, clean the data, convert to Excel and send to stakeholders for example.

Overall, this makes SQL databases easy to work with and data retrieval and analysis more accessible to a wider range of users.

Querying a SQL Database with Chains

Let's suppose you have a SQL Database hosted on RDS. It stores expense transaction information for your users. If you were to retrieve the total spend for a specific user, you'd need to write a SQL query similar to this one: SELECT SUM(amount) from Expenses WHERE UserId = 1.

But instead using LangChain and LLMs you can run a chain that takes in natural language and then creates the SQL for you. So to create that same query you'll instead use: What's the total spend for user 1?

Similarly your users would be able to chat with their data instead of you having to build complex and unnecessary endpoints and interfaces.

Text-to-SQL Query

In this code example, we'll see how we can create our SQL statement from text without execution:

from langchain.chat_models import ChatOpenAI

from langchain.chains import create_sql_query_chain

chain = create_sql_query_chain(ChatOpenAI(temperature=0), db)

response = chain.invoke({"question":"How many employees are there"

print(response)Code sample of text-to-SQL query without execution

Text-to-SQL Query and Execution

To create and execute a query that will retrieve the number of employees from our SQL table using chains, and then execute it:

from langchain.llms import OpenAI

from langchain_experimental.sql import SQLDatabaseChain

llm = OpenAI(temperature=0, verbose=True)

db_chain = SQLDatabaseChain.from_llm(llm, db, verbose=True)

db_chain.run("How many employees are there?")Code sample of text-to-SQL query with execution

This code block will create a chain that builds the SQL query based on the user question and then execute it.

Installation & setup

To install LangChain, you need to follow these steps:

- Open your command prompt or terminal.

- Type the command:

pip install langchain langchain-experimental openaiand pressEnter - Wait for the installation process to complete.

Create project folder

We'll then need to create our Python file and add our OpenAI API Key. To do so you'll need to follow the steps below:

cdto your project folder.- Type the command:

touch app.py - If you're using VSCode you can then type

code .to load the contents of the folder.

Load OpenAI API key

- In your terminal type

touch .env - Open the

.envfile and typeOPENAI_API_KEY="..."then save. - Install

python-dotenvfrom terminal usingpipby running:pip install python-dotenv - Open

app.pyand add the following:

from dotenv import load_dotenv

# Load environment variables from the .env file

load_dotenv()app.py. It is generally best practice to do it like this.That's it! To recap:

- We installed required packages

- Created a project folder and

app.pyfile - Added our OpenAI API Key to our environment variables

Connecting to RDS instance

In our example, we're working with a Microsoft SQL Database, hosted on RDS. To set it up with LangChain, we'll need to use the SQLAlchemy wrapper exposed as SQLDatabase. Let's import it:

from langchain import SQLDatabaseHere's the database set up:

# Connection parameters

server = '***.rds.amazonaws.com'

database = '***'

username = '***'

password = '***'

port = '1234' # If you're using a custom port (Remove for default)

connection_string = f'mssql+pyodbc://{username}:{password}@{server}:{port}/{database}?driver=ODBC+Driver+18+for+SQL+Server&TrustServerCertificate=yes'

db = SQLDatabase.from_uri(connection_string)

TrustServerCertificate=yes just because I've had some issues while connecting using SSL. It might not be needed in your case.Preparing our LLM & Chain

from langchain.llms import OpenAI

from langchain_experimental.sql import SQLDatabaseChain

llm = OpenAI(model_name="gpt-3.5-turbo-16k-0613", temperature=0, verbose=True)

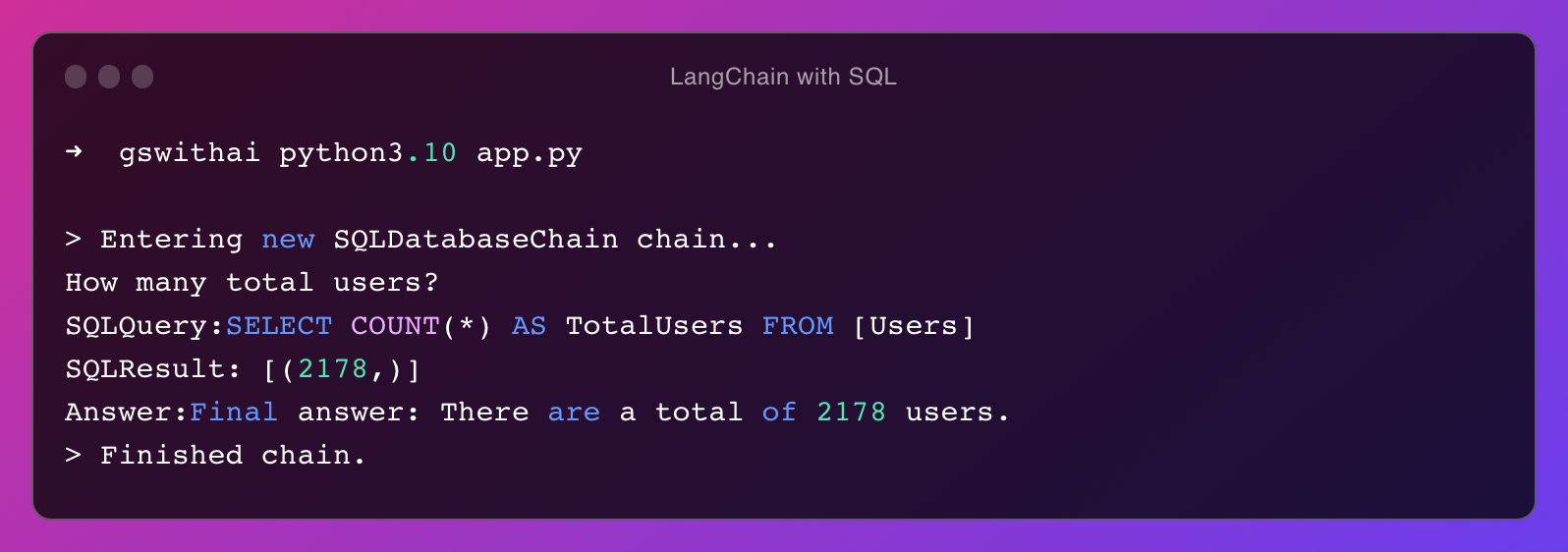

db_chain = SQLDatabaseChain.from_llm(llm, db, verbose=True)Ok, so as we've seen earlier, we're setting up our llm model by specifying the model_name, temperature and verbose parameters. Using SQLDatabaseChain tells LangChain to generate and execute the SQL simultaneously when we run this.

The temperature refers to the randomness of the model's output, 0 is the lowest value. Verbose on the other hand just prints out what LangChain is doing, instead of just generating the final answer to our query. Setting it to True will show the SQL query.

gpt-3.5-turbo-16k-0613 since it has a higher token capacity. I was getting token limitations errors with other models. This also might not be the case for you.Finally, we execute:

db_chain.run("How many total users?")Finally, in your terminal you can execute the code by typing python app.py. And would you look at that, it works like magic! 🪄

(Here's the overly used emoji in any AI-powered app nowadays: 🪄)

Great. I tried this with much more complex queries that included some nested SELECT statements and many joins. And most of the time, it works!

Source code for the geeks

Final thoughts

In this tutorial, I covered the basics of setting up LangChain and creating chains with SQL and OpenAI's LLM.

LangChain is a powerful tool that opens up a world of possibilities in building large language model powered apps. I believe we're going to see much more LLM apps in the near future, all sorts of startups and SaaS offerings.

Given that it's an easy-to-use framework with lots of capabilities, you can create autonomous agents, personal assistants, chatbots, and much more. I recommend you start playing around with LangChain today and recommend you also look into LlamaIndex. I've written a nice series of posts about the topic here:

- Introduction to Augmenting LLMs with Private Data using LlamaIndex

- Using Data Connectors to Build a Custom ChatGPT for Private Documents

- A Closer Look into Storage Customization, Persisting and Loading Data

Thank you for reading this tutorial. ❤️ As.you know, writing content takes a lot of time, and I do this so that we can both benefit. Therefore, if you enjoyed this post, go ahead and subscribe, it's completely free, and it takes less than a minute. You'll get early access to all my posts as well as my free newsletter and zero spam. Guaranteed.

I hope that the material I covered comes in handy in your journey of building amazing LLM-powered products! Let's connect on X (Formerly Twitter) and let me know if you have any comments below.

Happy coding!

Further readings

More from Getting Started with AI

- What is the Difference Between LlamaIndex and LangChain

- An introduction to RAG tools and frameworks: Haystack, LangChain, and LlamaIndex

- A comparison between OpenAI GPTs and its open-source alternative LangChain OpenGPTs