Add AI capabilities to your C# application using Semantic Kernel from Microsoft (Part 2)

In the second part of this tutorial, we will apply what we've learned in Part 1 and utilize the Semantic Kernel SDK in a simple C# console app.

Using the Semantic Kernel SDK

I am running macOS Ventura on an Apple M1 Pro chip, but the same steps should work in any environment.

Prerequisites

- .NET SDK (which includes the dotnet CLI)

- Semantic Kernel SDK

- OpenAI API Key

- Visual Studio Code (or your preferred IDE)

Step 1: Create a new C# Console app

In your terminal window type the following: mkdir SKDemo && cd SKDemo. Then, create a new C# console app by typing: dotnet new console.

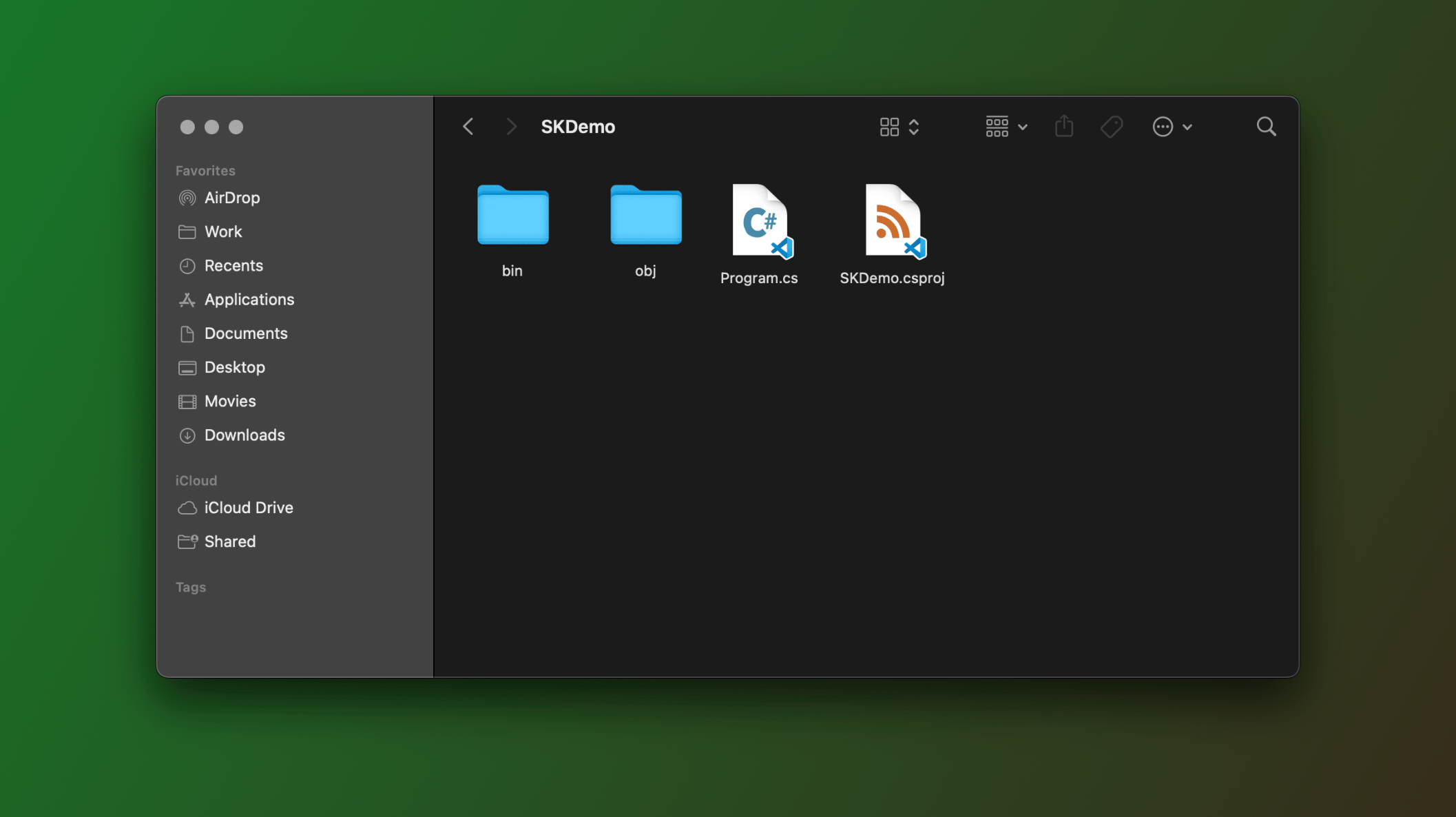

Your SKDemo root directory should now contain Program.cs, SKDemo.csproj, and an obj folder.

Install the Semantic Kernel SDK package from NuGet by entering the following command:

dotnet add package Microsoft.SemanticKernel --version 1.0.1

1.0.1 was the latest stable release at the time of writing; you may choose to install a newer version.

In our Program.cs file, let's prepare a few things:

// Import the Microsoft.SemanticKernel namespace (provides Kernel and the AddOpenAIChatCompletion extension method)

using Microsoft.SemanticKernel;

// Create a new Kernel builder

var builder = Kernel.CreateBuilder();

// Add OpenAI Chat Completion to the builder

builder.AddOpenAIChatCompletion(

    "gpt-3.5-turbo",

    "OPENAI_API_KEY");

Replace "OPENAI_API_KEY" with your actual OpenAI API key. You can get it from the OpenAI website.

We're now ready to start building our Plugins and Functions.
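Pasting the key straight into Program.cs is fine for a quick experiment, but it is easy to commit it to source control by accident. As a minimal sketch of an alternative, the snippet below reads the key from an environment variable. The variable name OPENAI_API_KEY and the RequireEnv helper are assumptions made for this example; the SDK does not require either.

```csharp
using System;
using Microsoft.SemanticKernel;

// Assumption: the key was exported in your shell before running the app, e.g.
//   export OPENAI_API_KEY="sk-..."
var apiKey = RequireEnv("OPENAI_API_KEY");

// Same setup as before, but the key now comes from the environment
var builder = Kernel.CreateBuilder();
builder.AddOpenAIChatCompletion("gpt-3.5-turbo", apiKey);

Console.WriteLine("Kernel builder configured.");

// Hypothetical helper: returns the variable's value, or throws if it is missing or empty
static string RequireEnv(string name) =>
    Environment.GetEnvironmentVariable(name) is { Length: > 0 } value
        ? value
        : throw new InvalidOperationException($"Environment variable {name} is not set.");
```

If the variable is missing, the app stops with a clear error message instead of sending an invalid key to OpenAI.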

Step 2: Create the Plugins folder

Great, you've made it this far so you're serious about using Semantic Kernel. If you recall from Part 1 of this tutorial, we're going to build two plugins:

FactmanPlugin: Responsible for all myth-related tasks. It will include the following functions:

- FindMyth: Finds a common myth about AI.

- BustMyth: Fact-checks and busts the myth.

- AdaptMessage: Adjusts the content to align with the specific posting guidelines of a given social media platform.

SocialPlugin: Our second plugin will be used by the Kernel to simulate posting to social media platforms. It has only one function: Post.

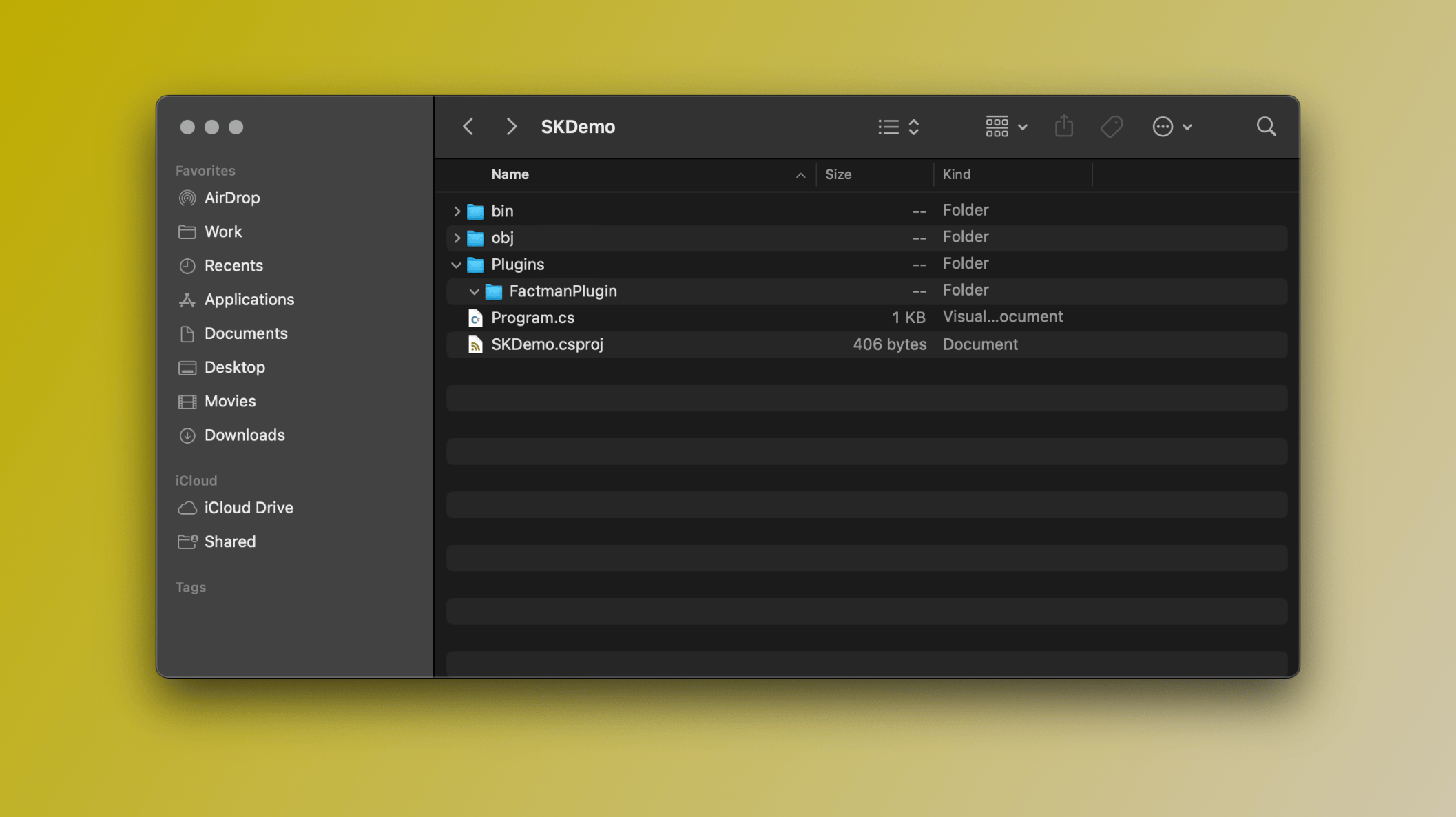

Let's create our Plugins folder, then we'll create another nested folder for our Plugin: FactmanPlugin.

Make sure you're in the root folder SKDemo. Then, in your terminal window type the following:

mkdir Plugins && cd Plugins

mkdir FactmanPlugin && cd FactmanPlugin

Plugins and FactmanPlugin folders

Step 3: Create the FindMyth function

Since we will instruct the LLM and ask it to generate a common myth about AI, this function will be of type Prompt.

- Create the FindMyth folder: While in the FactmanPlugin directory, type the following: mkdir FindMyth && cd FindMyth

Now, in the FindMyth directory, we'll need two files: skprompt.txt and config.json.

- Create the skprompt.txt file: This file will contain the natural language prompt for generating AI myths. Create it using the touch command: touch skprompt.txt

The following should go into the skprompt.txt file:

GENERATE A MYTH ABOUT ARTIFICIAL INTELLIGENCE

MYTH MUST BE:

- G RATED

- WORKPLACE AND FAMILY SAFE

- NO SEXISM, RACISM OR OTHER BIAS/BIGOTRY

DO NOT INVENT MYTHS ABOUT REAL PEOPLE OR ORGANIZATIONS

Prompt template: Feel free to play around with this to suit your needs.

- Create the config.json file: This file will hold our function’s configuration settings. Create it using the touch command: touch config.json

The following JSON should go into the config.json file:

{

"schema": 1,

"description": "Find a common myth about AI",

"execution_settings": {

"default": {

"max_tokens": 1000,

"temperature": 0.9,

"top_p": 0.0,

"presence_penalty": 0.0,

"frequency_penalty": 0.0

}

}

}

JSON schema definition of our function
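To check that the two files are picked up, the function can be loaded from disk and invoked once. This is only a sketch, not necessarily how the rest of this tutorial wires things up. It assumes the ImportPluginFromPromptDirectory extension method from the Semantic Kernel SDK, that the app is started with dotnet run from the SKDemo root (so the relative path resolves), and that the placeholder key has been replaced with a real one.

```csharp
using System;
using System.IO;
using Microsoft.SemanticKernel;

var builder = Kernel.CreateBuilder();
builder.AddOpenAIChatCompletion("gpt-3.5-turbo", "OPENAI_API_KEY"); // placeholder: use your real key
var kernel = builder.Build();

// Relative to the directory the app is run from (assumed to be SKDemo)
var pluginDirectory = Path.Combine("Plugins", "FactmanPlugin");

// Each subfolder holding skprompt.txt and config.json becomes one function,
// named after the subfolder (here: FindMyth)
var factman = kernel.ImportPluginFromPromptDirectory(pluginDirectory);

// Calls the OpenAI API using the prompt and execution settings defined above
var result = await kernel.InvokeAsync(factman["FindMyth"]);
Console.WriteLine(result.GetValue<string>());
```

The plugin name defaults to the folder name, FactmanPlugin. Since the model generates the text, the output will differ from run to run.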