What the Heck is MCP? (& what it isn't)

MCP is a new protocol that lets AI models call tools, access APIs, and fetch real-time data while responding to users. Here’s how it works and how it compares to RAG.

On the 25th of November 2024, Anthropic announced MCP in a blog post titled "Introducing the Model Context Protocol". MCP, as defined by Anthropic, is "a new standard for connecting AI assistants to the systems where data lives, including content repositories, business tools, and development environments."

So what does this mean? First, MCP is a protocol: it standardizes how LLMs connect to external data sources and tools. Suppose you want Claude to answer a question using private company data that isn't available on the internet. That's exactly where MCP steps in: it gives the model (such as Claude) a standard way to securely request and use that private information in real time.

So how is MCP different from other ways models get information from external sources, like RAG? Let's break it down.

MCP vs. RAG: What's the Difference?

Think of MCP and RAG as two methods AI models use to get information from external sources, but they work very differently.

RAG (Retrieval-Augmented Generation) commonly turns your prompt into a mathematical representation called a vector (an embedding). The stored content has already been converted into vectors the same way, so RAG can then use similarity search to find the entries in a vector database that are closest in meaning to your query. In other words, it measures how semantically similar each piece of stored data is to your prompt.

Once the most relevant information is found, it's pulled into the model's context and used to help generate a more informed response. In simple terms, RAG retrieves helpful information, adds it to the prompt alongside your question, and then generates an answer that draws on both the retrieved text and the model's existing knowledge.
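To make the retrieval step concrete, here is a minimal, assumption-heavy Python sketch. A real RAG system would use a learned embedding model and a vector database; here `embed` is a hypothetical bag-of-words stand-in and the "database" is an in-memory list. It shows the three steps described above: embed the query, rank stored chunks by cosine similarity, and prepend the best matches to the prompt.

```python
import math
from collections import Counter

# Hypothetical toy "embedding": lowercase word counts.
# A real RAG system would call a learned embedding model instead.
def embed(text: str) -> Counter:
    return Counter(text.lower().split())

def cosine_similarity(a: Counter, b: Counter) -> float:
    # Dot product over shared terms divided by the product of the vector norms.
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# In-memory stand-in for a vector database: (text, vector) pairs embedded ahead of time.
documents = [
    "refunds are issued within 14 days of a billing error",
    "our office is closed on public holidays",
    "invoices are emailed on the first day of each month",
]
index = [(doc, embed(doc)) for doc in documents]

def retrieve(query: str, k: int = 2) -> list[str]:
    # Rank every stored chunk by similarity to the query and keep the top k.
    q = embed(query)
    ranked = sorted(index, key=lambda pair: cosine_similarity(q, pair[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(query: str) -> str:
    # Augmentation: the retrieved text goes into the model's context before the question.
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"

print(build_prompt("how long do refunds take after a billing error"))
```

Swapping `embed` for a real embedding model and `index` for a vector database changes the quality of the matches, not the shape of the pipeline.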

MCP (Model Context Protocol) works more like a smart control panel the model can use while it's talking to you. Instead of loading all the information up front, the model can call tools, fetch data, or perform actions in real time during the conversation. As it generates a response, the model can recognize when it needs more information or needs to take action (checking a live database, calling an API, or doing a calculation) and request that step immediately; the application hosting the model runs the call through MCP and hands the result back to the model. This makes MCP ideal for interactive tasks where the model needs to use tools or pull in live information on the fly.
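The "mid-conversation" step is, in practice, a loop run by the host application: the model emits a tool-call request, the host executes it (with MCP, by forwarding it to a server), and the result is fed back into the context before the model continues. The sketch below fakes both sides to show only that control flow; `fake_model`, `call_tool`, and the `get_exchange_rate` tool (with its made-up rate) are hypothetical and use no real model or MCP SDK.

```python
import json

# Hypothetical tool standing in for one exposed by an MCP server.
def get_exchange_rate(base: str, quote: str) -> float:
    rates = {("EUR", "USD"): 1.10}  # made-up fixed rate for illustration
    return rates[(base, quote)]

TOOLS = {"get_exchange_rate": get_exchange_rate}

def call_tool(name: str, arguments: dict) -> str:
    # In a real host this would be a "tools/call" request sent to an MCP server.
    return json.dumps(TOOLS[name](**arguments))

def fake_model(messages: list[dict]) -> dict:
    # Stand-in for an LLM: with no tool result in context it asks for one;
    # once a result is present it answers using that result.
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "get_exchange_rate",
                "arguments": {"base": "EUR", "quote": "USD"}}
    rate = json.loads(tool_results[-1]["content"])
    return {"type": "text", "text": f"100 EUR is about {100 * rate:.2f} USD."}

def run_conversation(user_message: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        if reply["type"] == "text":
            return reply["text"]
        # The model asked for a tool mid-response: run it and feed the result back.
        result = call_tool(reply["name"], reply["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("model did not finish within max_steps")

print(run_conversation("How much is 100 EUR in USD?"))
```

The `max_steps` cap is a design choice worth keeping in real hosts too: it stops a model that keeps requesting tools from looping forever.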

The key difference? RAG excels at search-heavy tasks where background knowledge is needed up front and where information is stored and organized in a vector database, while MCP shines in real-time scenarios where the model can interact with services and tools directly throughout the conversation.

Why MCP Matters Now

With the rise of AI agents in 2024 and 2025, there's been an explosion of tools and integrations that AI models need to access. Before MCP, each tool typically required its own custom, often rigid integration. MCP provides a unified framework that standardizes how AI models discover and interact with external resources, which makes it easier to build sophisticated AI assistants that work across multiple systems.

Tech giants like Microsoft and Google have since announced support for Anthropic's MCP, and it is emerging as a common standard for connecting agents to data.

Can MCP & RAG Work Together?

Absolutely, and in fact they're most powerful when used together. Imagine a customer support system handling a billing issue:

- RAG retrieves relevant company policies and pricing information from stored documentation.

- MCP calls a billing API to fetch the customer's recent invoice data.

- MCP triggers a refund tool if needed.

- RAG provides context about refund policies to explain the process.
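The billing scenario above can be sketched as a single handler. Every function and value here is hypothetical: `search_policies` stands in for a RAG retriever (a plain keyword lookup rather than a similarity search), while `get_recent_invoice` and `issue_refund` stand in for tools an MCP server might expose. The invoice data and refund rule are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class Invoice:
    invoice_id: str
    amount: float
    charged_twice: bool

# --- Hypothetical RAG side: policy text retrieved from stored documentation.
POLICIES = {
    "refund": "Duplicate charges are refunded in full to the original payment method.",
    "pricing": "The Pro plan costs 20 USD per month.",
}

def search_policies(topic: str) -> str:
    # A real retriever would run a similarity search; this is a keyword lookup.
    return POLICIES.get(topic, "")

# --- Hypothetical MCP side: tools that touch live systems.
def get_recent_invoice(customer_id: str) -> Invoice:
    # Stub: ignores customer_id and always returns the same invoice.
    return Invoice(invoice_id="INV-001", amount=20.0, charged_twice=True)

REFUNDS_ISSUED: list[str] = []

def issue_refund(invoice_id: str, amount: float) -> str:
    REFUNDS_ISSUED.append(invoice_id)
    return f"Refunded {amount:.2f} USD for {invoice_id}."

def handle_billing_issue(customer_id: str) -> str:
    pricing = search_policies("pricing")          # RAG: background knowledge
    invoice = get_recent_invoice(customer_id)     # MCP: live invoice data
    if not invoice.charged_twice:
        return f"{pricing} Your latest invoice {invoice.invoice_id} looks correct."
    confirmation = issue_refund(invoice.invoice_id, invoice.amount)  # MCP: action
    policy = search_policies("refund")            # RAG: explain the process
    return f"{confirmation} {policy}"

print(handle_billing_issue("cust-42"))
```

In a real assistant the model, not hard-coded `if` logic, would decide when to call each step; the sketch only shows which side (RAG or MCP) supplies what.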

In this setup, RAG provides the knowledge foundation while MCP handles the real-time actions, making the AI assistant both well-informed and capable of taking action.

Yeah, I know what you're thinking... We're one step closer to replacing humans entirely...

MCP vs. API: What's the Difference?

Now that you understand what MCP is and how it works, you can probably guess the answer yourself.

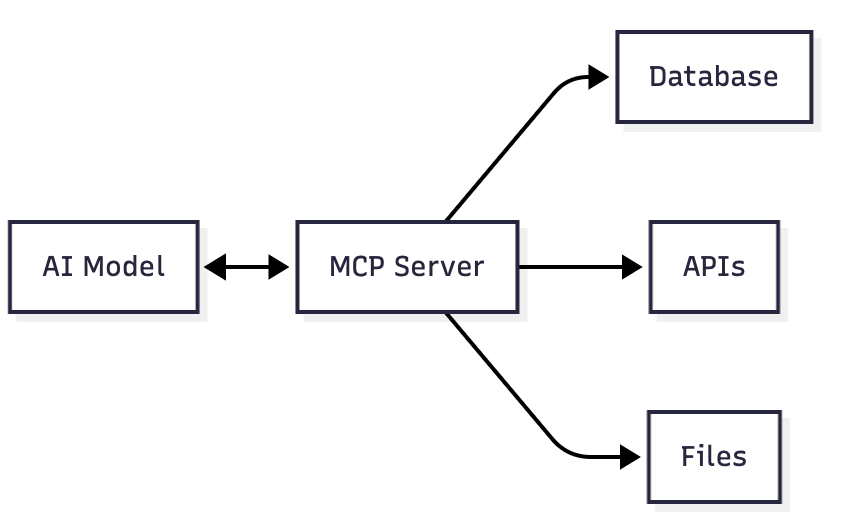

MCP is not an API; it's a protocol that tells a model how to use APIs. Think of it as a blueprint that tells the model which tools are available, what they do, and how to use them.

An MCP server (more on the "server" part below) acts as the bridge between the model and external tools. It provides a structured list of available functions (like APIs, databases, or internal services), along with their input and output formats. The model then relies on MCP to learn about available tools in the same standard way, whichever server provides them.
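MCP messages use JSON-RPC 2.0. As a sketch of that "structured list of available functions", here is roughly what a server's reply to a `tools/list` request looks like, built as a Python dict: each tool carries a `name`, a `description`, and an `inputSchema` (a JSON Schema for its arguments). The `get_invoice` tool and its fields are hypothetical; the MCP specification defines the full message format, including optional fields omitted here.

```python
import json

# Hypothetical tool definition, as an MCP server might advertise it.
get_invoice_tool = {
    "name": "get_invoice",
    "description": "Fetch a customer's most recent invoice.",
    "inputSchema": {  # JSON Schema describing the tool's arguments
        "type": "object",
        "properties": {"customer_id": {"type": "string"}},
        "required": ["customer_id"],
    },
}

def tools_list_response(request_id: int) -> dict:
    # JSON-RPC 2.0 response to a "tools/list" request; the id echoes the request's id.
    return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": [get_invoice_tool]}}

request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(json.dumps(tools_list_response(request["id"]), indent=2))
```

Because every server answers `tools/list` in this shape, a client can present tools from many different servers to the model without custom glue code for each one.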

So while an API is the tool itself (e.g. GET /invoice), MCP defines the interface the model uses to discover and call that tool correctly during a conversation.
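To illustrate the distinction: the model's side never issues `GET /invoice` directly. It sends a `tools/call` request naming a tool and its arguments, and the MCP server translates that into the real API call. This sketch shows only that translation; the base URL `https://billing.example.com`, the `get_invoice` tool, and the query-parameter layout are hypothetical, and the function builds the request string instead of performing HTTP.

```python
from urllib.parse import urlencode

BILLING_API = "https://billing.example.com"  # hypothetical REST API behind the tool

def to_api_request(tool_call: dict) -> str:
    """Translate an MCP tools/call request into the HTTP request it stands for."""
    params = tool_call["params"]
    if params["name"] != "get_invoice":
        raise ValueError(f"unknown tool: {params['name']}")
    query = urlencode(params["arguments"])
    return f"GET {BILLING_API}/invoice?{query}"

# What the model's side sends: a tool name plus JSON arguments, with no URL or HTTP verb.
tool_call = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_invoice", "arguments": {"customer_id": "cust-42"}},
}
print(to_api_request(tool_call))
```

If the billing API later moved or changed its URL scheme, only the server's translation would change; the tool name and arguments the model uses would stay the same.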

What is an MCP server?

An MCP server is like a helpful robot at the entrance of a restaurant. Before you even sit down, the robot greets you and explains what you can do inside:

- "Read the menu" = Resources (like GET endpoints for data)

- "Place an order" = Tools (executable functions the AI can call)

- "Ask for the bill" = Also a Tool (any action the AI can perform)

Thanks to this robot, you know exactly how to interact with the restaurant and what kinds of requests you can make. In the same way, an AI model talks to an MCP server, which provides all the information it needs to use the available tools and services: what's possible, what inputs are required, and what to expect in return.

The MCP server then handles whatever requests the model makes.
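The restaurant analogy maps onto a small request dispatcher. In this stdlib-only sketch, a hypothetical `menu://today` resource is read with `resources/read`, while the hypothetical `place_order` and `request_bill` tools run through `tools/call`. It is not the official MCP SDK: it skips the `initialize` handshake and the transport layer, and its result shapes are simplified from the specification.

```python
ORDERS: list[str] = []

# Resources: read-only data addressed by URI ("read the menu").
RESOURCES = {"menu://today": "soup, pasta, salad"}

# Tools: functions the model may invoke ("place an order", "ask for the bill").
def place_order(item: str) -> str:
    ORDERS.append(item)
    return f"Ordered {item}."

def request_bill() -> str:
    return f"Bill for {len(ORDERS)} item(s)."

TOOLS = {"place_order": place_order, "request_bill": request_bill}

def handle(request: dict) -> dict:
    method, params = request["method"], request.get("params", {})
    if method == "resources/read":
        uri = params["uri"]
        result = {"contents": [{"uri": uri, "mimeType": "text/plain", "text": RESOURCES[uri]}]}
    elif method == "tools/call":
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # Standard JSON-RPC "method not found" error.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

print(handle({"jsonrpc": "2.0", "id": 1, "method": "resources/read",
              "params": {"uri": "menu://today"}}))
print(handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
              "params": {"name": "place_order", "arguments": {"item": "pasta"}}}))
print(handle({"jsonrpc": "2.0", "id": 3, "method": "tools/call",
              "params": {"name": "request_bill"}}))
```

Keeping resources (reads) and tools (actions) under separate methods lets a host treat them differently, for example by asking the user to confirm before running a tool.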

Current Limitations

While MCP is promising, it's still early days. Adoption is limited, and there aren't many MCP servers available yet. Security and authentication standards are still evolving, and building robust MCP servers requires careful consideration of error handling and resource management.
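Error handling is a good example of that care. The MCP specification distinguishes protocol errors (such as a request for an unknown tool), which come back as a JSON-RPC `error`, from errors that happen while a tool runs, which come back as a normal result with `isError: true` so the model can see what went wrong and adapt. The sketch below shows that split; the `get_invoice` tool and its failure rule are hypothetical, and treating every exception raised during execution as a tool error is a simplifying assumption.

```python
def get_invoice(customer_id: str) -> str:
    # Hypothetical tool that fails for any customer other than "cust-42".
    if customer_id != "cust-42":
        raise LookupError(f"no invoices for {customer_id}")
    return "INV-001: 20.00 USD"

TOOLS = {"get_invoice": get_invoice}

def handle_tools_call(request: dict) -> dict:
    name = request["params"]["name"]
    arguments = request["params"].get("arguments", {})
    if name not in TOOLS:
        # Protocol error: the request names no known tool, so reply with a JSON-RPC error.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    try:
        text, is_error = TOOLS[name](**arguments), False
    except Exception as exc:
        # Execution error: report it inside the result so the model can read it.
        text, is_error = f"Tool failed: {exc}", True
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": is_error}}

print(handle_tools_call({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                         "params": {"name": "get_invoice", "arguments": {"customer_id": "cust-7"}}}))
```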

The Bottom Line

MCP represents a significant step toward more standardized, capable AI assistants. Instead of every AI application building custom integrations, MCP provides a common language for AI models to interact with the tools and data they need. Combined with techniques like RAG, it opens up possibilities for AI assistants that are both knowledgeable and capable of taking real-world actions.

The future of AI isn't just about better models; it's about better connections between models and the systems we use every day.